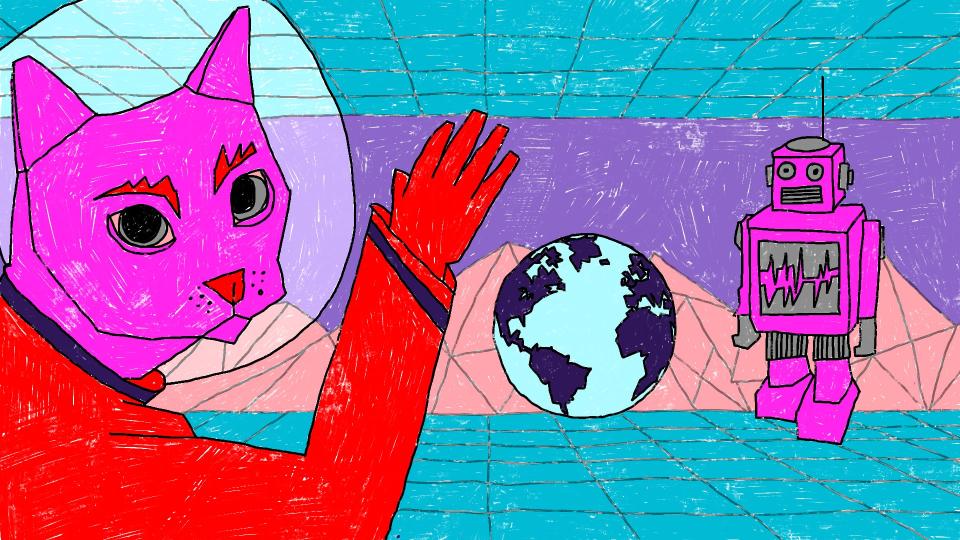

Illustration by Rawand Issa for GenderIT.

This edition of GenderIT came together at a time of daily breaking news around artificial intelligence (AI) and the serious risks of bias, misalignment, governance challenges, job loss, and human extinction.

We are not the first generation to panic about the social implications and governance of new tech. In the late 16th century, Queen Elizabeth I denied a patent to the inventor of a new automated knitting machine for fear it would affect “young maidens who obtain their daily bread by knitting.” In 1859, French poet and critic Charles Baudelaire called photography “art's most mortal enemy.”

But we are undoubtedly facing the fastest-developing technological advancements in human history, and it has never been particularly useful to position oneself against technological development. Recent calls to pause AI development became largely symbolic, drowned out by a more dominant “race to AGI” frenzy.

Keeping up with the news and filtering out the hype isn’t easy. Amid the AI debates, most women experts tend to reject the futuristic, existential threats of AI and instead call for addressing the existing, documented biases in algorithms, datasets, and training models right now. Most of the expertise and resources to unpack AI threats are concentrated in Western institutions and academia. For us in the Global South who are activists and organisers, these are terrific and challenging opportunities to take stock of our movements and rethink how we participate in the technology governance that influences our lives.

For a region like the MENA (which some authors refer to as S/WANA, others as Arabic-speaking countries), tech policy problems are compounded by a litany of daily struggles, the most devastating being occupation, war, conflict, and displacement. These affect, we sometimes forget, two billion people, a quarter of the world’s population. People Like Us are often, sadly, irrelevant to or tokenized in global policy. In this edition of GenderIT, we have sought to challenge this, building upon the important work happening in the region right now.

In Cairo, the Access to Knowledge for Development Center (A2K4D) serves as the MENA hub of the A+ Alliance Feminist AI research project, building a network of multidisciplinary stakeholders to grapple with this unprecedented pace of technological change against a backdrop of growing, multifaceted inequalities in MENA, especially with regard to gender. They are also part of the Fairwork Project, working to set and measure decent work standards in the platform economy, including its impact on domestic work. These are all urgent and important areas of work, and in this issue we explore more of the thinking around safety and freedom amid significant economic change.

Tunisian researcher Afef Abrougui writes about the perils of AI-enhanced technologies deployed by authoritarian regimes in the region for increased surveillance and predictive policing. She raises important questions about the readiness of civil society in the MENA to respond to these dangers and about what is needed to preserve privacy and human rights in the coming years.

AI expert Nour Naim, from Gaza, Palestine, details the particular challenges of gender and racial bias in algorithms and datasets. In her piece, she presents an overview of important and emerging trends in the movement for trustworthy AI, as well as activist responses to these inequalities.

In my article, I invite you to use this AI hype to think of its economic undercurrent, surveillance capitalism, as equally urgent. Thinking about AI needs futurology, but thinking about surveillance economies requires history. I make the argument that radical movements, feminists especially, have been captured by this new economy and that a migration from data platforms is necessary to movement-building right now.

Raya Sharbain, an open-source advocate from Jordan, makes the case for digital self-defence to support sexual rights and reproductive justice movements amid growing crackdowns and threats. She elaborates on the use of secure and protective technologies to share and access information critical to feminist movements.

Journalist Yara el Murr, from Lebanon, presents a case study of kotobli, a book discovery platform that counters the colonial algorithms used in searching for books about the SWANA region. She details how Big Tech favours publishers and authors from the Global North, and presents the challenges and opportunities of working with local publishers and authors to change this.

Habash from Egypt explores the relationship between feminism and blockchain technologies in a curious and critical review of the possibilities emerging in decentralised autonomous organisations (DAOs) and distributed cooperative organisations (DisCos). They accompany us through several iterations and thought experiments on political organisation and theory in the realm of web3.

The beautiful illustrations accompanying this issue were drawn by Rawand Issa, who, in the process, reflected on how generative AI is affecting her own field of visual art and the design market. We discussed and explored tools like Stable Diffusion together and, while Rawand opted not to use AI in her work, we organically started referring to non-AI work as the “old” way. Something about the cliché and chaos of diffusion, its stereotypical generations, struck Rawand as less inspiring and more controlling, antithetical to the creative visualisation process. It felt more like an “online visual dump,” reminding her of a movie scene where “robots go to a dead robots dump and try to find better parts for their bodies.”

Reading through the articles, Rawand felt curiosity coupled with anxiety about the future of design, fearing that these tools may be imposed by the market in the same way as the expensive Adobe Suite, which was nearly impossible to resist. Her feelings are reflected in the illustrations, which became a series of rooms in a cyberpunk world. Each illustration tells the story of a different anxiety using classical references like The Matrix, 2001: A Space Odyssey, the Mortal Kombat video game, and Athena, the goddess and protectress, with symbolism inspired by our feminist and queer communities.

Add new comment